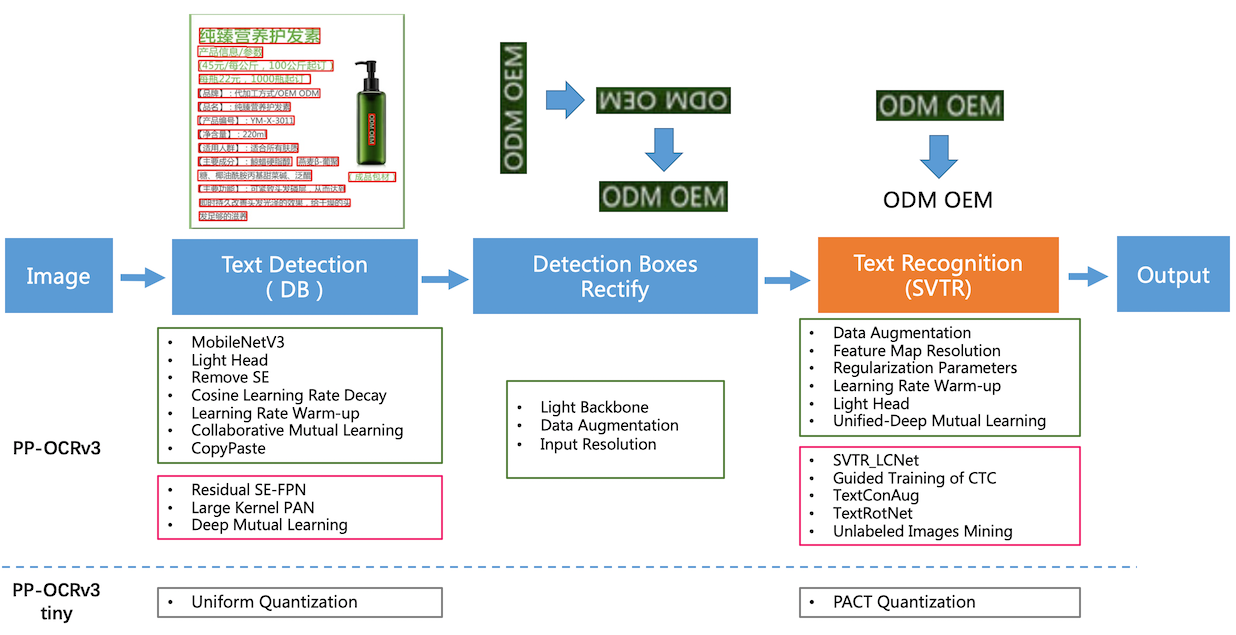

OCR Pipeline

Abstract

We use PaddleOCR, an open-source Optical Character Recognition (OCR) framework built on PaddlePaddle, the open-source deep learning framework and suite of solutions developed by Baidu. Specifically, we use a variant of the PP-OCRv3 model that is quantized and trained with knowledge distillation to reduce its size while maintaining accuracy.

Metrics

PP-OCRv3 has a reported accuracy of 79.4%. On our selected dataset, we observed an accuracy of ~85% when checking the fields Invoice ID, Total Amount, Vendor Name and Date.
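To make the field-level figure concrete, the sketch below shows one way such an accuracy could be computed. It is an illustration only: the exact-match criterion, the `normalize` helper, and the dictionary keys are assumptions, not a description of the evaluation actually used.

```python
# Hypothetical field names mirroring the four fields checked in this report.
FIELDS = ("invoice_id", "total_amount", "vendor_name", "date")


def normalize(value):
    """Lowercase and collapse whitespace so trivial formatting differences are ignored."""
    return " ".join(str(value).lower().split())


def field_accuracy(predictions, ground_truth, fields=FIELDS):
    """Return (per-field accuracy, overall accuracy) using exact match after normalization."""
    if not ground_truth or len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must be non-empty and equally long")
    correct = {f: 0 for f in fields}
    for pred, truth in zip(predictions, ground_truth):
        for f in fields:
            # A missing predicted field counts as an error.
            if normalize(pred.get(f, "")) == normalize(truth[f]):
                correct[f] += 1
    n = len(ground_truth)
    per_field = {f: correct[f] / n for f in fields}
    overall = sum(correct.values()) / (n * len(fields))
    return per_field, overall
```

Reporting the per-field values alongside the overall number helps show whether errors concentrate in one field, such as amounts or dates.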

The inference time on a Colab T4 GPU is about 0.2 s/image on average.

Note that the first time the model is run, the first image takes about 1 s longer.
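Because the first call is slower, an average latency is more representative when warm-up runs are excluded. The following is a minimal sketch of such a measurement; `run_ocr` is a hypothetical callable standing in for whatever function runs the model on one image, and the warm-up count of 1 is an assumption.

```python
import time


def mean_latency(run_ocr, images, warmup=1):
    """Average seconds per image, excluding the first `warmup` calls."""
    if len(images) <= warmup:
        raise ValueError("need more images than warm-up runs")
    for image in images[:warmup]:
        run_ocr(image)  # warm-up: first-call initialisation is not timed
    start = time.perf_counter()
    for image in images[warmup:]:
        run_ocr(image)
    elapsed = time.perf_counter() - start
    return elapsed / (len(images) - warmup)
```

For example, `mean_latency(run_ocr, image_paths)` would return the per-image time over all images except the first.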

Review of other open source OCRs

1. Tesseract

We attempted to use Tesseract to extract information from images. It failed to recognize handwritten data and often misread numbers, which were essential to our task.

2. KerasOCR

The accuracy for KerasOCR, and the speed were both found to be worse when compared to

3. EasyOCR

EasyOCR was easy to implement and gave better results than Tesseract and KerasOCR, but it was slower than PaddleOCR when inference times were compared on an image.

4. docTR

docTR also performed well in terms of accuracy, but was slower by a factor of 2 compared to PP-OCRv3-slim. Because inference time is an important part of the performance calculation, we chose PaddleOCR over it.

After testing all of these, we found PaddleOCR to be the most suitable for our project. An additional advantage of PaddleOCR is the flexibility offered by its range of model versions (v3, v4, v3-slim, etc.).

About PP-OCRv3-slim

The PP-OCRv3 model (like the other models in PP-OCR) consists of two separate models, one for Text Detection and one for Text Recognition. The slim variant additionally applies quantization, along with knowledge distillation.
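As a rough illustration of why quantization shrinks a model, the sketch below maps floating-point weights to 8-bit integer codes with a single shared scale. This is a generic symmetric per-tensor scheme chosen for clarity; it is an assumption and not a description of the quantization procedure actually applied to the slim model.

```python
def quantize_int8(weights):
    """Map floats to integer codes in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]
```

Each weight is then stored in one byte instead of four, at the cost of a rounding error of at most half the scale per weight.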

Text Detection (v3-det)

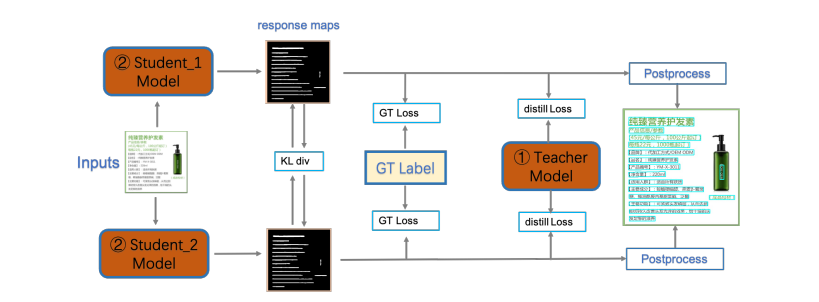

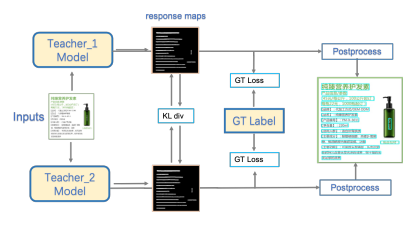

The PP-OCRv3 text detection model uses the Collaborative Mutual Learning (CML) distillation strategy, which combines a traditional Teacher-Student model approach with Deep Mutual Learning (DML), where multiple Student models learn from each other. In PP-OCRv3, both the Teacher and Student models are optimized for better text detection performance.
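The mutual-learning idea can be sketched as a symmetric KL-divergence term that pulls the predictions of two peer models towards each other. The code below is a simplified illustration over a single vector of logits; a detection model produces probability maps rather than class logits, and the supervised and teacher-distillation terms of the full objective are omitted here.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def mutual_learning_loss(logits_a, logits_b):
    """Symmetric KL term: zero when both peers predict the same distribution."""
    p, q = softmax(logits_a), softmax(logits_b)
    return 0.5 * (kl_divergence(p, q) + kl_divergence(q, p))
```

During training, each peer would minimize this term in addition to its own supervised loss, so that the two models exchange what they have learned.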


Key Components:


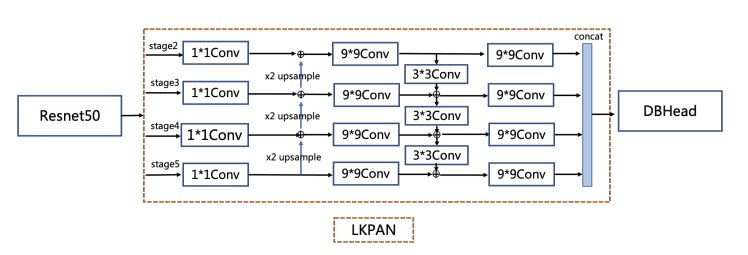

LK-PAN (Large Kernel Path Aggregation Network)

LK-PAN enhances the Teacher model by increasing the convolution kernel size in the PAN module to 9x9, expanding the receptive field. This helps in detecting larger text and text with extreme aspect ratios.

DML (Deep Mutual Learning)

DML is used in the Teacher model, where two models with the same architecture learn from each other, improving their overall accuracy.

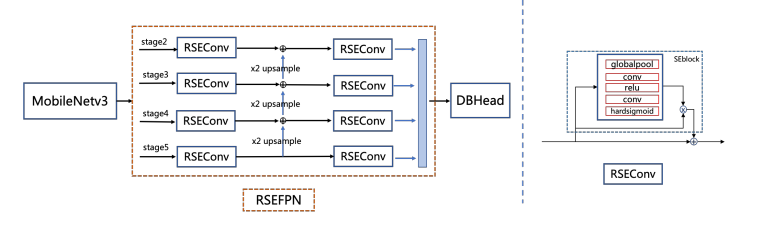

RSE-FPN (Residual Squeeze-and-Excitation Feature Pyramid Network)

RSE-FPN improves the Student model by combining a Squeeze-and-Excitation (SE) Block with a Residual Structure. This helps the model focus on important features and maintain information flow, crucial for detecting small or complex text.
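The combination of an SE block with a residual connection can be sketched as follows. This is a minimal pure-Python illustration on a feature map stored as nested lists (channels, rows, columns); the weight matrices `w1` and `w2` are hypothetical parameters, and the convolution layers of the actual network are omitted.

```python
import math


def se_gates(feature_map, w1, w2):
    """Squeeze (global average pool per channel), then excite (FC -> ReLU -> FC -> sigmoid)."""
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_map]
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    return [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden)))) for row in w2]


def residual_se(feature_map, w1, w2):
    """Return x + SE(x): channel-reweighted features added back onto the input."""
    gates = se_gates(feature_map, w1, w2)
    return [
        [[v + g * v for v in row] for row in ch]
        for ch, g in zip(feature_map, gates)
    ]
```

The residual path means that even a channel whose gate is close to zero still passes its original features through, which is the information-flow property described above.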

Text Recognition

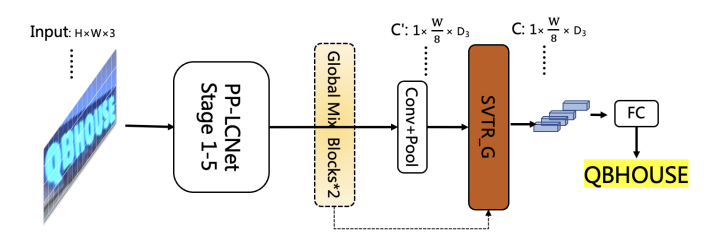

The text recognition pipeline is inspired by SVTR (Single Visual model for Scene Text Recognition) and modifies it to increase the speed of the model.


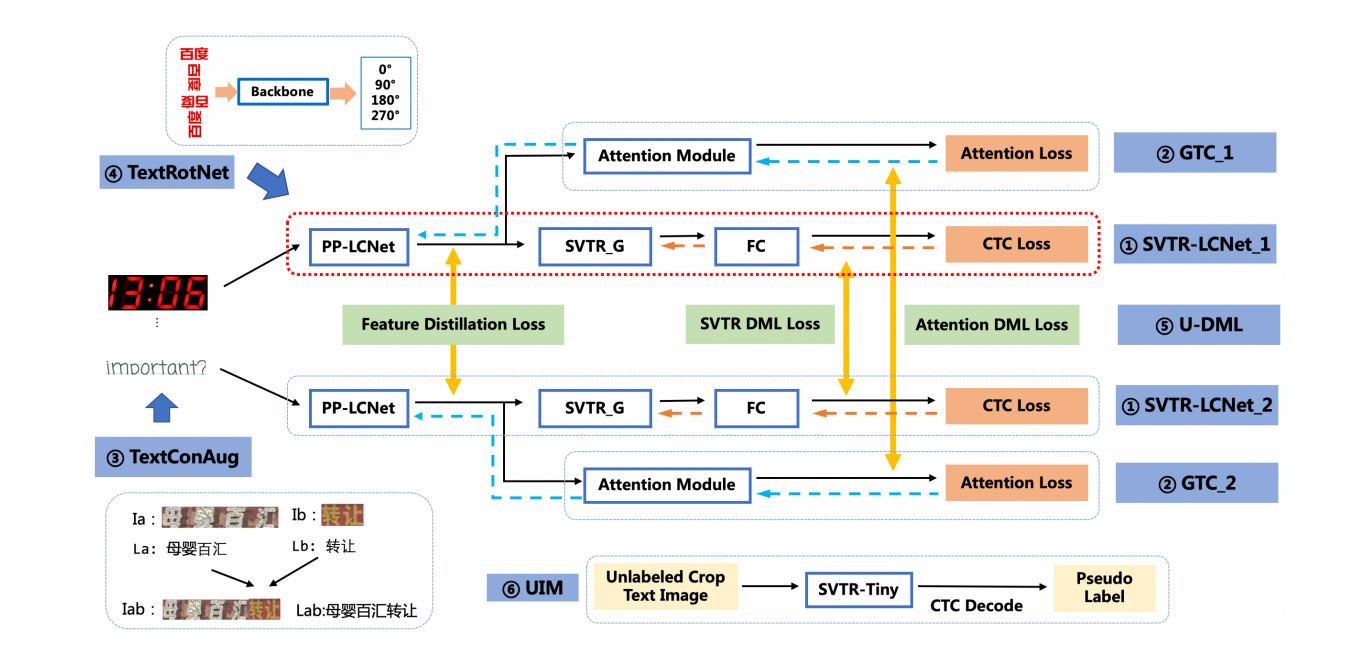

SVTR-LCNet (SVTR Lightweight Convolutional Network)

SVTR-LCNet is a lighter and faster network that fuses the transformer-based SVTR with the CNN-based PP-LCNet, also from PaddlePaddle.


GTC (Guided Training of CTC by Attention)

The Connectionist Temporal Classification (CTC) loss is optimized with guidance from an attention-based module during training. Because the attention module is removed at prediction time, it adds no time cost to inference.
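Since only the CTC branch remains at prediction time, decoding can be illustrated with standard greedy CTC decoding: take the most likely class per frame, collapse consecutive repeats, and drop blanks. The sketch below assumes that class index 0 is the blank and that index `i + 1` corresponds to `alphabet[i]`; the decoder actually used by the library may differ.

```python
def ctc_greedy_decode(frame_probs, alphabet):
    """Greedy CTC decoding with the blank at class index 0."""
    blank = 0
    # Most likely class index for every time step.
    best = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    chars = []
    prev = blank
    for idx in best:
        # Emit a character only when it is not blank and not a repeat of the previous frame.
        if idx != blank and idx != prev:
            chars.append(alphabet[idx - 1])
        prev = idx
    return "".join(chars)
```

A blank between two identical classes is what allows doubled letters to survive the collapsing step.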


TextConAug & UIM (Unlabeled Images Mining)

TextConAug is a data augmentation strategy for mining textual context information, and is used to improve the training data. UIM, in which unlabeled images with high prediction confidence are added as training data, is also used to enhance the training set.
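The UIM selection step amounts to confidence-based filtering of model predictions on unlabeled images. A minimal sketch is given below; the tuple layout and the threshold value of 0.95 are assumptions made for illustration.

```python
def mine_pseudo_labels(predictions, threshold=0.95):
    """Keep (image_id, text) pairs whose prediction confidence is at least `threshold`.

    `predictions` is an iterable of (image_id, predicted_text, confidence) tuples.
    """
    return [
        (image_id, text)
        for image_id, text, confidence in predictions
        if confidence >= threshold
    ]
```

The retained pairs would then be treated as additional labeled samples when retraining the recognition model.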

In addition, Unified Deep Mutual Learning (U-DML) is used, where models of different architectures are trained simultaneously within a single framework.